Creating a "lie detector" for deepfakes

Deepfakes are phony videos of real people, generated with artificial intelligence software by people who want to undermine our trust.

The images you see here are NOT actor Tom Cruise, President Barack Obama, or Ukrainian President Volodymyr Zelenskyy, who in one fake video called for his countrymen to surrender.

These days, deepfakes are becoming so realistic that experts worry about what they'll do to news and democracy.

- The impact of deepfakes: How do you know when a video is real? ("60 Minutes")

- Synthetic Media: How deepfakes could soon change our world ("60 Minutes")

But the good guys are fighting back!

Two years ago, Microsoft's chief scientific officer, Eric Horvitz, co-creator of the spam email filter, began trying to solve this problem. "Within five or ten years, if we don't have this technology, most of what people will be seeing, or quite a lot of it, will be synthetic. We won't be able to tell the difference."

"Is there a way out?" Horvitz wondered.

As it turned out, a similar effort was underway at Adobe, the company that makes Photoshop. "We wanted to think about giving everyone a tool, a way to tell whether something's true or not," said Dana Rao, Adobe's chief counsel and chief trust officer.

Pogue asked, "Why not just have your genius engineers develop some software program that can analyze a video and go, 'That's a fake'?"

"The problem is, the technology to detect AI is developing, and the technology to edit AI is developing," Rao said. "And there's always gonna be this horse race of which one wins. And so, we know that for a long-term perspective, AI is not going to be the answer."

Both companies concluded that trying to distinguish real videos from phony ones would be a never-ending arms race. And so, said Rao, "We flipped the problem on its head. Because we said, 'What we really need is to provide people a way to know what's true, instead of trying to catch everything that's false.'"

"So, you're not out to develop technology that can prove that something's a fake? This technology will prove that something's for real?"

"That's exactly what we're trying to do. It is a lie detector for photos and videos."

Eventually, Microsoft and Adobe joined forces and designed a new feature called Content Credentials, which they hope will someday appear on every authentic photo and video.

Here's how it works:

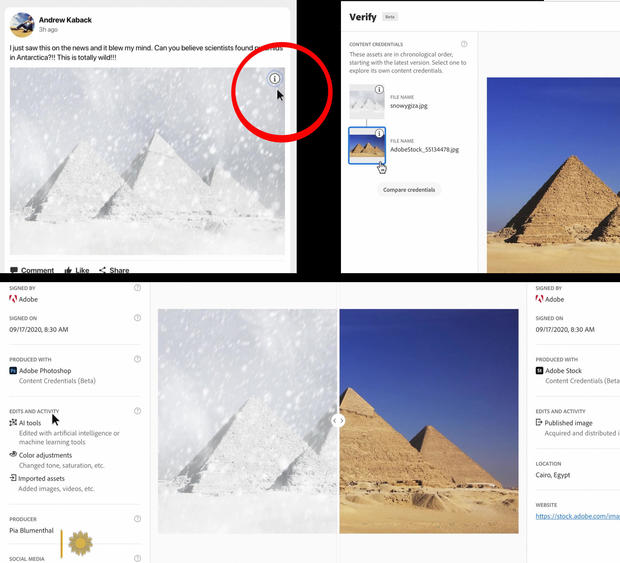

Imagine you're scrolling through your social feeds. Someone sends you a picture of snow-covered pyramids, with the claim that scientists found them in Antarctica, far from Egypt! A Content Credentials icon, published with the photo, reveals the photo's history when you click it.

"You can see who took it, when they took it, and where they took it, and the edits that were made," said Rao. If there's no verification icon, you might conclude, "I think this person may be trying to fool me!"

Already, 900 companies have agreed to display the Content Credentials button. They span the entire life cycle of photos and videos, from the cameras that take them (such as Nikon and Canon) to the websites that display them (such as The New York Times and The Wall Street Journal).

Rao said, "The bad actors, they're not gonna use this tool; they're gonna try and fool you and they're gonna make up something. Why didn't they wanna show me their work? Why didn't they wanna show me what was real, what edits they made? Because if they didn't wanna show that to you, maybe you shouldn't believe them."

Now, Content Credentials aren't going to be a silver bullet. Laws and education will also be needed, so that we, the people, can fine-tune our baloney detectors.

But in the next couple of years, you'll start seeing that special button on photos and videos online – at least the ones that aren't fake.

Horvitz said they are testing different prototypes. One would indicate if someone has tried tampering with a video. "A gold symbol comes up and says, 'Content Credentials incomplete,' [meaning] step back. Be skeptical."

Pogue said, "You're mentioning media companies – New York Times, BBC. You're mentioning software companies – Microsoft, Adobe – who are, in some realms, competitors. You're saying that they all laid down their arms to work together on something to save democracy?"

"Yeah. Groups working together across the larger ecosystem: social media platforms, computing platforms, broadcasters, producers, and governments," Horvitz said.

"So, this thing could work?"

"I think it has a chance of making a dent. Potentially a big dent in the challenges we face, and a way of us all coming together to address this challenge of our time."

Story produced by John Goodwin. Editor: Ben McCormick.

See also:

- New software designed to help media detect deepfakes – but it's just a "drop in the bucket" ("CBS This Morning")

- Facebook bans "deepfake" videos, with exceptions

- Cheerleader's mom accused of making "deepfake" videos of daughter's rivals

- CU Denver helps Pentagon battle the threat posed by deepfakes

- "Emotional skepticism" needed to stop spread of deepfakes on social media, expert says ("CBS This Morning")